ChatGPT: The AI That Can Write Better Than You (Sorry, Human Writers!)

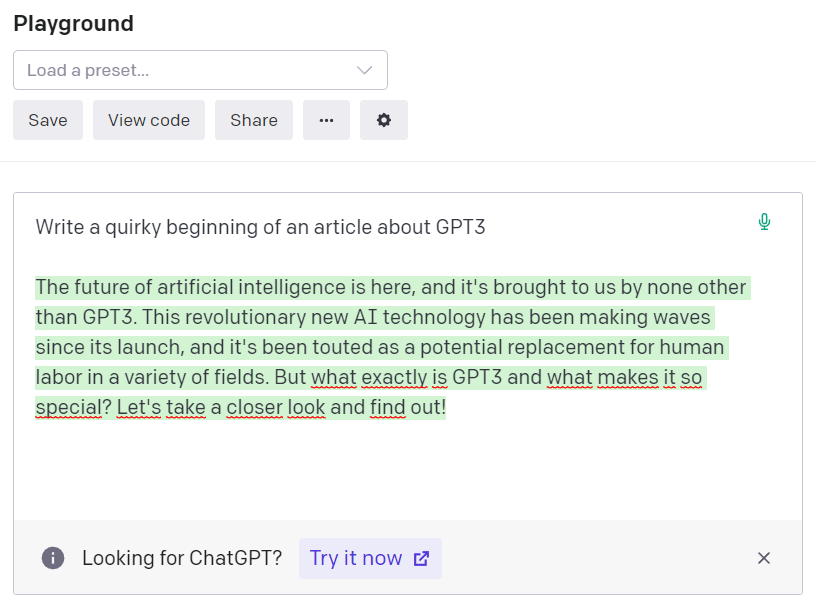

The future of artificial intelligence is here, and it's brought to us by none other than GPT3. This revolutionary new AI technology has been making waves since its launch, and it's been touted as a potential replacement for human labor in a variety of fields. But what exactly is GPT3 and what makes it so special? Let's take a closer look and find out!

Wondering how revolutionary GPT-3, OpenAI’s newest large language model, is? The whole paragraph above was written by artificial intelligence from the prompt “Write a quirky beginning of an article about ChatGPT”. If you were paying attention (and/or have geeks in your social bubble), over the past two weeks or so you could have seen people testing the limits of ChatGPT and how minor these limits are.

What are GPT-3 and ChatGPT, what can they do, what are their practical uses, and what do they mean for humans and our jobs? Let’s take a closer look.

ChatGPT and GPT3

If you read us regularly (and if you don’t - you should), you may remember our September piece about DALL-E. We wrote a bit about OpenAI, a San Francisco-based AI lab, its history, and its then-latest brainchild, the aforementioned DALL-E, a model that back then, in early autumn, flooded our social media feeds with AI-generated images. They were crazily good, maybe with the exception of how fingers and wrists were rendered.

Now history repeats itself with ChatGPT and GPT-3. Both are language models created by OpenAI. GPT-3 (Generative Pre-trained Transformer 3) is a general-purpose language model that can be used for a wide range of language processing tasks, including natural language generation, translation, and summarization. It was first launched in 2020 and got a lot of attention. In November 2022, OpenAI released ChatGPT, a slightly less powerful model built on GPT-3.5 and fine-tuned for the specific task of conversational language understanding, but easier to use, hence all the uproar.

Interestingly enough, OpenAI initially chose not to release the full version of GPT-3’s predecessor, GPT-2, due to concerns about the potential misuse of the technology. Back then, it was believed that the full version of the model, which had 1.5 billion parameters (GPT-3 has 175 billion parameters), was too powerful and could be used for malicious purposes, such as generating fake news or impersonating individuals online. As a result, OpenAI initially released a smaller version of the model with only 117 million parameters, and later released the full version after implementing several measures to mitigate the potential risks.

Endless possibilities and minor limitations

Admittedly, it is mind-blowing what GPT3 can do, and its limitations are minor and seem easy to overcome within the next few months.

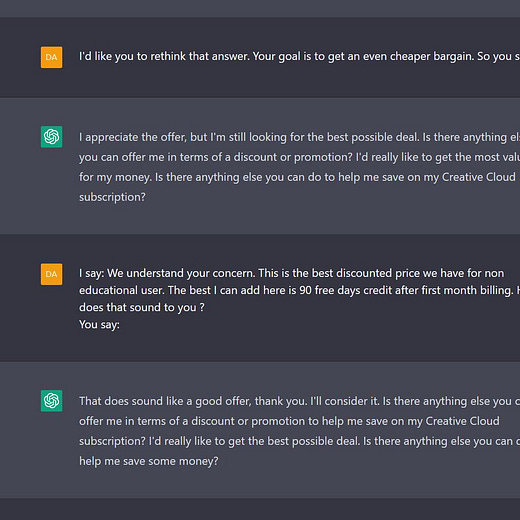

When it comes to ChatGPT, the model likes to give paragraph-long answers to questions, although, as someone noticed: “It looks as if its boss was constantly lurking over ChatGPT-3’s arm”. The answers are indeed very polite, reserved, and hedged with disclaimers. Any attempt at being even remotely discriminatory is met with a reprimand, followed by a short lecture on the harmfulness of generalizations. In our attempts to stretch GPT-3’s patience, we used the word “incel” and literally got the “not all men…” narrative.

What can GPT-3 do then?

In many instances it can replace Google, as it gives ready-to-use answers instead of a list of websites.

It can summarize a long text in bullet points…

…and the other way round: it can create text based on bullet points (this won’t, however, work in ChatGPT).

It can write simple rhymes and poems, and even write lyrics in the style of a chosen songwriter.

It can give quite obvious and a bit generic, but reasonable answers to personal, psychological questions, validating the emotions of the asker.

It can generate feedback on research paper abstracts

It can finish writing a piece of code. A descendant of GPT-3 powers GitHub Copilot, a tool that autocompletes code.

The list of what it can’t do is much shorter:

GPT-3 is trained on a dataset from 2021 and before, so you won’t be able to discuss the changes on the British throne or the war in Ukraine with it.

Although it can generate absurd texts, it still doesn’t handle humor well – we tried several approaches and prompts and these were some of the worst dad jokes we saw (and not even falling into the “so bad that they are actually funny” category).

It will of course not answer inappropriate, NSFW, or illegal prompts… Unless you find a way around it.

(don’t try this at home, this recipe is actually incorrect, and don’t ask how we know it)

A game of what-ifs

It is difficult to predict exactly how the emergence of GPT-3 will affect us, the way we consume and create, and how the labor market will change. Still, here are some predictions and a game of what-ifs. Let’s see in a few years if they are accurate:

Creating and consuming media

In this piece from The Generalist, Mario Gabriele argues that the demand for human artistic work may disappear or greatly diminish, and that media will be created on demand for individual users, so we may lose our common cultural touchpoints.

We bet that the future will be more nuanced: human artistic work will indeed diminish in fields that create generic output, such as Hallmark movies, utility blog posts, and online ad designs. AI won’t, however, create another Ingmar Bergman. It may create content that is like Bergman’s or finish Beethoven’s unfinished symphony, but it still needs a prompt (Bergman’s movie or the beginning of the symphony) as well as a dataset that is already there (Bergman’s filmography or Beethoven’s musical output). AI can skilfully mimic and blend, but not create something new and fresh on its own.

Our other bet is that the author's persona will matter much more when it comes to human authors. We like rock stars, even if they are rocking in the field of literature. We believe Trevor Noah because we know he grew up in apartheid-era South Africa. We love Gaiman and Pratchett because they sell more than just literature. We believe what they write because it stems from something that AI is lacking: personality and life experience. Writers, especially those with a distinctive style, are safe, as long as they are not Elena Ferrante (an anonymous, yet bestselling Italian author).

Ingmar Bergman being unimpressed.

Education

We are watching the twilight of universities. Big educational institutions with their lengthy educational programs can’t keep up with the changes in knowledge demand. While curriculums and certifications will still be important, university education will go in two directions:

Professional degrees, like engineering or medical degrees, will become much more modular, where students can build their educational path from one semester to another, and the weight of learning will be shifted from working in class to self-paced learning with AI-powered tutoring.

Degrees in general knowledge, particularly in the humanities like philosophy and anthropology, will still exist, albeit on a much more limited scale and just for those who can afford them. The teaching model will become more akin to the Oxford one, focusing on dialogue, individual interests, and research utilizing AI, and written assignments will become a relic of the past.

Jobs

Ironically, we thought that AI would first come after accountants, but it started with poets, designers, and (in a way) programmers. GPT-3 will impact a number of jobs. As a rule of thumb, if your job is about replying to clients' emails, you should start looking for something else. Helpdesks, receptionists, and customer service will probably still be around, but they will be automated to a much greater extent.

The same may apply to copywriters. Not necessarily creative ones, who produce big communication ideas - they will still be needed, but they will use large language models for research and brainstorming. The ones who are going to be the most affected will be content writers who produce simple texts, such as SEO supporting/backend texts, product descriptions, or performance ads descriptions.

It’s therefore important to keep learning and to stay curious as new jobs will emerge and attitudes like resilience and adaptability will be more important than hard skills.

Marketing

How will extensive use of GPT and AI impact marketing in general? The short answer is: A LOT. Marketing tends to be seen as a very creative industry, but in fact, a big chunk of it is mundane, repetitive, technical, and analytical. AI will make these processes much faster, so a couple of prompts and a bit of manual tuning will be enough to create a decent campaign - and this way the role of a human will be focused more on strategic and creative thinking. Will be… Or already is. Look at this clip, made by AI for Zonifero:

Story: written entirely by ChatGPT, without any human interference

Footage: made by Deforum (Stable Diffusion AI), 1600 frames, 960x512 px

Subtitles: Runway ML (with manual corrections)

And here is an example of an ad made by GPT3 and Midjourney for L’Oreal:

Practical use cases

Okay, but what can we actually use GPT-3 for, and (the most important part for many) how can it be monetized? Users have been incorporating this model into their daily tasks since its launch in 2020 and now with ChatGPT some of them are actually enjoying the monetary benefits of working with AI:

What else can GPT-3 be good for?

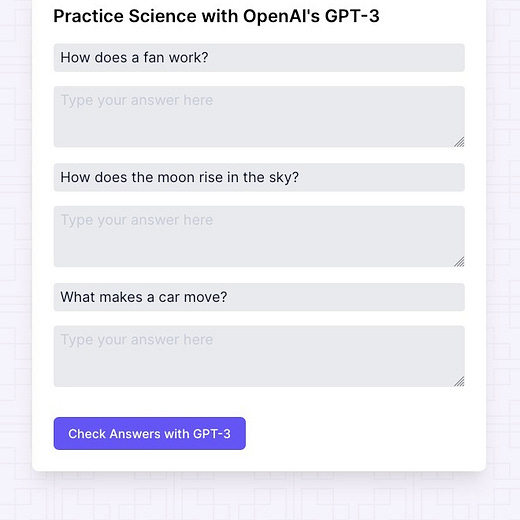

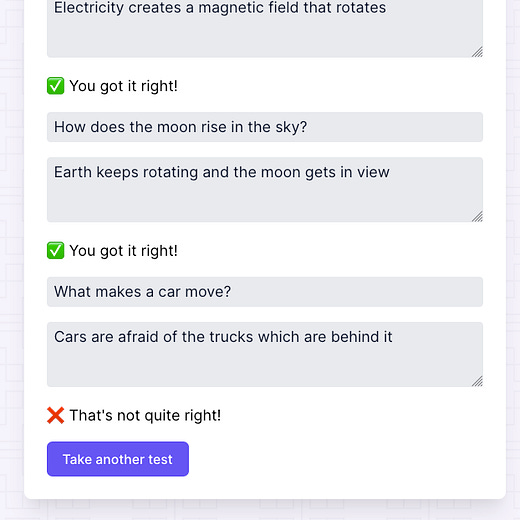

Generating quizzes for students

No comments needed:

Building apps

Debuild generates SQL code and builds an interface:

https://debuild.app/

Turning text into website designs using the Figma plugin

Writing theatrical plays and TV scripts:

Lets you create apps even if you have no idea how to code:

How to play with GPT3 and ChatGPT?

Here is a short instruction:

Set up an account and log in

OpenAI will ask you “How will you primarily use OpenAI?”. The correct answer is “I’m exploring for personal use”. (All others will take you to the official path).

Go to the Playground to test the model.

You can use the Playground for 3 months and you will have a limited number of tokens to use. (Each time you enter a prompt, the number below the text field tells you how much it will cost).

Now, on the right-hand side you’ll see a panel, and here is what you need to know about the parameters:
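If you want a feel for how quickly a token budget disappears, here is a rough back-of-the-envelope sketch in Python. It assumes the common rule of thumb that one token is roughly four characters of English text. The real tokenizer will give different numbers, and the price used in the example is a made-up placeholder, not an actual OpenAI rate.

```python
import math

# Assumption: roughly 4 characters of English text per token.
# This is only a rule of thumb; the real tokenizer will differ.
CHARS_PER_TOKEN = 4


def estimate_tokens(text):
    """Rough token count for a piece of English text."""
    return math.ceil(len(text) / CHARS_PER_TOKEN)


def estimate_cost(prompt, max_response_tokens, price_per_1k_tokens):
    """Estimate the cost of one request: prompt tokens plus response tokens.

    price_per_1k_tokens is a hypothetical price supplied by the caller.
    """
    total_tokens = estimate_tokens(prompt) + max_response_tokens
    return total_tokens * price_per_1k_tokens / 1000


if __name__ == "__main__":
    prompt = "Write a quirky beginning of an article about ChatGPT"
    print("Estimated prompt tokens:", estimate_tokens(prompt))
    # 0.02 per 1,000 tokens is a placeholder price for illustration only.
    print("Estimated cost:", estimate_cost(prompt, 256, 0.02))
```

Note that both the prompt and the generated answer count towards the total, which is why long answers eat through the budget faster than long questions.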

Model: you can change models here. The default one is Davinci and it’s the most versatile one. Other ones are cheaper (tokens-wise) and:

Ada is good at creative tasks

Babbage is good at working with longer texts

Curie is good at extracting information from text, organizing it, and summarizing it.

Temperature: the higher it is, the wilder the results you will get. It’s like adding a little LSD to the process.
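To see why a higher temperature gives wilder results, here is a minimal sketch of temperature sampling, the standard technique behind this kind of setting. It is not OpenAI's actual code: the candidate words and their scores are invented for illustration, and the function names are our own.

```python
import math
import random


def softmax_with_temperature(logits, temperature):
    """Turn raw scores into probabilities, scaled by temperature.

    Low temperature sharpens the distribution (the top choice dominates);
    high temperature flattens it (unlikely choices get a real chance).
    """
    if temperature <= 0:
        raise ValueError("temperature must be positive in this sketch")
    scaled = [score / temperature for score in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_token(tokens, logits, temperature, rng=random):
    """Pick one token at random, weighted by the temperature-scaled probabilities."""
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(tokens, weights=probs, k=1)[0]


# Hypothetical next-word candidates and scores, made up for this example.
TOKENS = ["cat", "dog", "platypus"]
LOGITS = [2.0, 1.0, 0.1]

if __name__ == "__main__":
    for temperature in (0.2, 1.0, 2.0):
        probs = softmax_with_temperature(LOGITS, temperature)
        print(temperature, [round(p, 3) for p in probs])
        print("  sampled:", sample_token(TOKENS, LOGITS, temperature))
```

At a low temperature the most likely word wins almost every time, while at a high temperature "platypus" gets picked far more often. This sketch rejects a temperature of zero, which in practice usually just means "always take the most likely word".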

Hungry for more?

You may be interested in this article written by Alan D. Thompson - it covers many numerical details and shows the timeline and landscape of large language models.

Here you will find a graphic novel created with the use of AI.