Dallying with DALL·E 2

This story could have started like a great fairy-tale: a long time ago in a valley far, far away, a mighty lord, a wise wizard, and a brilliant merchant decided to unite their powers to create a superhero, who would then save humanity. But it’s not a fairy tale, it's a postmodern world in late 2022 and fairy tales don’t develop as they used to anymore. So, let’s rephrase it:

It’s December 2015, San Francisco is blinking with Christmas lights, people rush for their last-minute holiday gifts, and Elon Musk, Sam Altman, and Ilya Sutskever are about to open their brand new non-profit lab that pledges to ensure that artificial general intelligence (AGI) is created safely and would benefit all humanity evenly. Beautiful idea, isn’t it?

Open AI’s building in San Francisco’s Mission District

(Image by HaeB, CC Share Alike 4.0 International)

OpenAI’s humble poverty lasted exactly 4 years before the company got a $ 1 000 000 boost from Microsoft and turned for-profit in a widely criticized move. Since its foundation, OpenAI became famous mostly for producing text-generating and music-generating models - GPT3 and MuseNet / Jukebox, that could create their own music or try to finish existing songs. Is it a Grammy-worthy attempt? Check for yourself:

And DALL·E 2? DALL·E 2 is the last brainchild of the controversial lab, an AI system that is able to transform text into image.

Wall-E and Dalí walk into the bar…

If “DALL·E 2” sounds familiar to you, then you are right - it’s a mashup of WALL·E -the name of the 2008 Pixar movie and its main character- a self-aware robot, and Salvador Dalí, the famous Spanish painter, hailed as the father of surrealism in art. And indeed, the grotesque creations of the system have something in common with the famous Spaniard’s paintings.

DALL·E 2 generates images based on a textual description. Do you want to see what a very buff teapot would look like? Say no more, your sick imagination is the limit for the system’s (re)creativity:

In April OpenAI decided to let a carefully selected 100 000 users from the invite-only list play with the model. And this is exactly when the Internet burst with weird, grotesque, and bizarre images that told us as much about AI’s capabilities as it did about their authors’ imagination.

On June 20th the model entered a beta phase, turned into a paid service, and a million more users from the waitlist were allowed to purchase a 15$ subscription that buys 115 credits that could then generate images in a 1 credit to 1 image ratio.

Hey, DALL·E 2, draw a politician

How does it work? To put it very simply, DALL·E 2 creates images. You can ask the model to create an image of a nervous content manager frantically writing an article to meet a deadline, akhem, and the outcome is surprisingly accurate (it skipped empty coffee cups stacking on the desk though):

You could also ask for : “Girl in the park, reading a book, Matisse style”

Or “Teddy bears working on new AI research on the moon in the 1980s” :

Or for “Dali, autoportret”

“Okay” - you may ask - “but how does it REALLY work, and what is INSIDE?” DALL·E 2, like any machine learning model, analyzes huge volumes of labelled data to find common features they share. How exactly it’s done is not entirely clear, that’s why the black box metaphor is often used when talking of AI: it's a mechanism that takes input and gives output, but no one knows what happens inside. Let’s take a computer vision API: after analysing a bazillion images that feature a beige, fluffy object with black dots, it can be one of two things: these objects were labelled either a blackberry muffin or a chihuahua.

With generating an object, the AI black box works in a similar manner - it analyzes bazillions of objects it has in its database and spits out something that’s an average of all these objects taken together. That’s why DALL·E 2 early users reported the technology’s alleged bias - when you ask for an image of a billionaire, in most cases the person in the picture will be white. If you ask for an image of a caregiver - the person will be a woman: mostly (well, it’s complicated) not because the technology is biased, but because of the input dataset it works on.

In July OpenAI announced that the dataset is going to be changed so that it can reflect global diversity, but in fact, making tweaks seems to be a hopeless battle at times. Shortly after OpenAI made an amendment to address the gender bias, users started reporting an increased number of female Mario Bros images generated.

In late August DALL·E 2 creators launched Outpainting: the functionality that allows to continue an image beyond its original borders:

What can and can’t DALL·E 2 do?

There are tasks that DALL·E 2 is acing: adding, altering, or replacing images in existing pictures. It can even create a set of clothes in videos:

It has, however, a hard time creating words in pictures to the point where some people can’t help feeling that DALL·E 2 generated its own gibberish language (spoiler alert, it didn’t).

Although DALL·E 2 interpretative skills are impressive, there are some limitations. First of all - there are those imposed by OpenAI - users can’t generate images of famous people and photorealistic images of people in general. There are also filters that prevent violent or NSFW image generation (although some Redditers are pretty disappointed with the latter).

Secondly - it has some problems with text comprehension. DALL·E 2 understands words, like “panda” or “latte art”, but sometimes has a hard time making sense of what you want. “Panda latte art”? Or “Panda making latte art”? What’s the difference? Who knows? Surely not DALL·E 2 - which was pointed out in a humble-brag paper created by Google, who happens to work on DALL·E 2 competition, Imagen. Google’s model seems to have nailed the task:

Source: Google’s research

But Imagen is not the only competition for DALL·E 2. Parti is another model created by Google, based on a different architecture than Imagen, that seems to beat its older brother in creating images based on comprehensive descriptions.

There is also Stable Diffusion - another text-to-image model publicly launched by a collective of researchers from RunwayML, LMU Munich in late August; EleutherAI and LAION - led by Stability AI, a British AI studio, in cooperation with HuggingFace and CoreWeave. The interesting thing is that Stable Diffusion is free, downloadable, runs locally on your graphic card or in the cloud. and the NSFW restrictions will be lifted, which supposedly will bring smiles to Redditors’ faces. Even if it’s not the best model out there, its availability may make it the most popular one.

Another model worth mentioning is Midjourney, and apparently, it’s good at generating good-looking, high-resolution images out of simple prompts. Compared to DALL·E 2 it’s like searching for an image on Google Graphics vs searching for an image on Pinterest.

Last but not least, there is also DALL·E 2 mini, recently renamed Craiyon, a lightweight tool that allows users to create images that are a bit wonkier than those generated by DALL·E 2, but due to the tool’s low usage cost - also more accessible.

Watch this video to find out about the differences between DALL·E 2, Midjourney, and Stable Diffusion:

Which AI is better? Dall-e 2, MidJourney, Disco Diffusion, and Stable Diffusion. Comparing AI.

Ownership

AI-generated art causes new questions to arise: who owns it? Is it the AI creator or the user? OpenAI states clearly in its inaugurational post for DALL·E 2 launch:

Starting today, users get full usage rights to commercialize the images they create with DALL·E 2, including the right to reprint, sell, and merchandise. This includes images they generated during the research preview.

Does it mean you now own that image of a cat in space you just created? No! It takes some digging into OpenAI’s Terms & Conditions to find out that the company still owns your generations (so the output, input being a “prompt” or “upload” - as DALL·E 2 can generate image from text or alter already existing images), but just gives you a license to use it in any way you want:

Ownership of Generations. To the extent allowed by law and as between you and OpenAI, you own your Prompts and Uploads, and you agree that OpenAI owns all Generations (including Generations with Uploads but not the Uploads themselves), and you hereby make any necessary assignments for this. OpenAI grants you the exclusive rights to reproduce and display such Generations and will not resell Generations that you have created, or assert any copyright in such Generations against you or your end users, all provided that you comply with these terms and our Content Policy.

“Artificial Intelligence in court in a copyright case” - these sound like some really mighty Harry Potter spells.

There is also a question of how many of the generations can be copyrighted at all, given that copyright can apply only when we talk about “original works of authorship”. When it comes to short prompts it’s really hard to talk about creative authorship.

Implications

OpenAI not claiming copyrights, opens a window of opportunity for those who want to monetize AI-generated art. At the same time, it causes panic amongst artists and visual creators. What will change with models getting more proficient at generating art, who should look for a new job and who can rest easy? Let’s see.

First of all, AI art for sale is already here and there seems to be a new job emerging: an AI whisperer, who knows the spells that can make AI produce exactly what we want. A new prompt-selling marketplace, PromptBase lures those who discovered their AI-enchanting skills: Sell your prompts on PromptBase and make money from your prompt crafting skills. Upload your prompt, connect with Stripe, and become a seller in just 2 minutes.

Whose jobs are in danger then? Likely re-creative visual jobs. If your work is mostly adding and removing objects, doing packshots, making this thing smaller, that thing bigger, changing font to Comic Sans, per client’s wishes, and adding a pineapple in the foreground, etc - then boy, have we got news for you - AI will be able to meet commercial standards once it gets more proficient and gets rid of glitches over time. Stock photographers, packshot photographers, (some) graphic designers, storyboarders, retouchers - beware!

Designers and photographers with more “human” and creative approach are safe: event photographers focused on recording memories, creative designers, art directors, comic artists, and illustrators with their own unique style and added value can keep on working and AI may even become their ally for conceptual planning and raw sketches.

The rise of text-to-image AI is good news for small businesses, all those who could not afford a designer and made their designs in Canva from royalty-free images sourced from Unsplash. To all those industries and professionals who need a raw visualisation, like interior designers, landscape architects, TV producers, DALL·E 2 & co will provide mock-ups good enough for brainstorming and ideation.

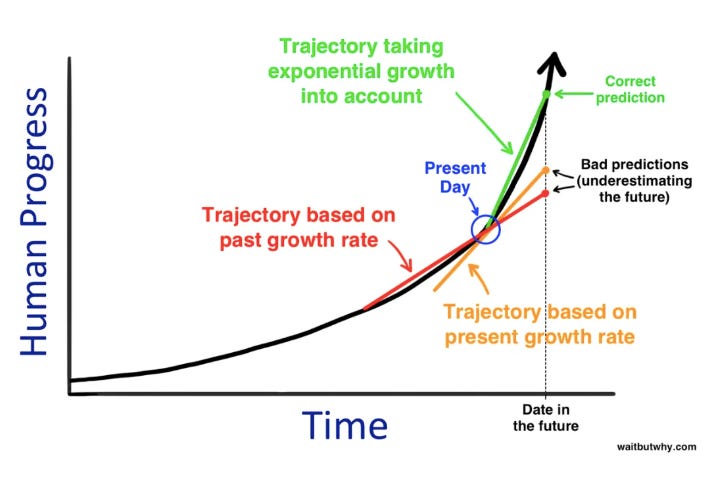

But that’s just the beginning. We are in the moment of history when the snowball effect of technology is getting more and more visible, and happens much faster:

It might sound scary for now, but so was the automobile in 1910, when Farmers’ Anti-Automobile Society of Pennsylvania lobbied for changes in the state law:

Source: Bottlenecks: Aligning UX Design with User Psychology, David C. Evans.

1. Automobiles traveling on country roads at night must send up a rocket every mile, then wait ten minutes for the road to clear. The driver may then proceed, with caution, blowing his horn and shooting off Roman candles, as before.

2. If the driver of an automobile sees a team of horses approaching, he is to stop, pulling over to one side of the road, and cover his machine with a blanket or dust cover which is painted or colored to blend into the scenery, and thus render the machine less noticeable.

3. In case a horse is unwilling to pass an automobile on the road, the driver of the car must take the machine apart as rapidly as possible and conceal the parts in the bushes

Just like we don’t keep Roman candles in our cars, in 5, 10, 50 years from now we won’t be scared of creative AI - it will be interwoven in our daily lives and will redefine how we think about creativity and work.

New chapters, like this, might be full of new possibilities, if we stay curious enough, antifragile and open to what’s new. This is how we grow, even if it may feel unnatural or artificial (pun intended) at first:

Are you ready for dallying with Dalle now?